Are you telling the whole story with your metrics? If your metrics are not considering measures of data dispersion, you are not missing half, but rather most of the picture. So often people focus on the average (measure of central tendency) and leave off dispersion (measure of data tightness) altogether.

Imagine trading in your Toyota Camry for a Suburban or a Humvee. You come up the driveway, open the garage door and jam the new, much wider vehicle into a garage built for a compact. It doesn’t matter how well you center on the tennis ball hanging from the ceiling; at best, you’re hitting trash cans, ladders and golf clubs, and you probably can’t open the door.

Think of your customer specifications (Voice of the Customer or VOC) as the walls of the garage, and the process measurements of centering and dispersion (Voice of the Process) as the position and size of the vehicle you are trying to park. If you are parking a motorcycle, centering becomes virtually irrelevant, whereas pulling the new Suburban in becomes a feat of carefully calculated maneuvers.

Pp and Ppk Indices

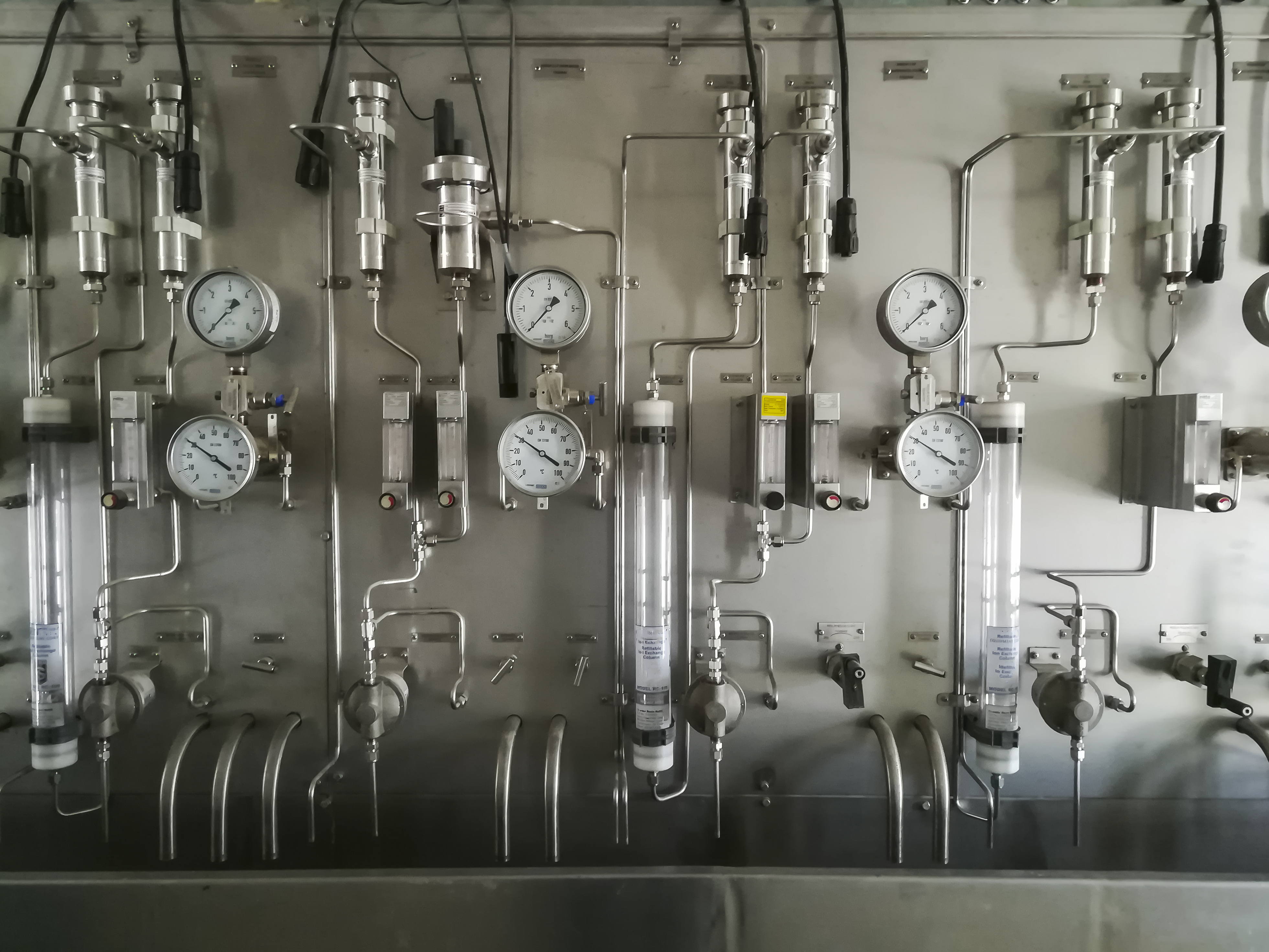

The process capability indices Pp and Ppk measure how well your data vehicle fits inside your customer specification garage. Pp measures how well the spread of the process data fits within the customer specifications (how the width of the garage compares to the width of the vehicle). Ppk measures how close the center of the data is to the nearest specification limit, relative to that spread (how close the vehicle is to one wall or the other).

One of the great things about using Pp and Ppk is, because they are indices, they allow you to compare one process’ performance against another regardless of unit of measure. A process with a Pp index of 1.43 is performing better relative to customer specifications than a process with a Pp index of 1.21…every time. So, all else being equal, Pp and Ppk can help a team decide which process requires attention first.

Calculating Pp and Ppk

You need a few bits of information to calculate Pp and Ppk. Sorry, a little lightweight statistics is involved, but fortunately Excel can do all the heavy lifting.

- Mean (Excel: =average(X1, X2, X3, X4…))

- Standard Deviation (Excel: =stdev.s(X1, X2, X3, X4…))

- Upper Specification Limit (USL provided by customer)

- Lower Specification Limit (LSL provided by customer)
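If you would rather script it than use Excel, here is a minimal Python sketch that gathers the same four inputs. The measurements and specification limits are made-up illustration values, not real process data. `statistics.stdev` is the sample standard deviation, which is assumed here to be the counterpart of Excel's stdev.s.

```python
import statistics

# Hypothetical measurements, invented purely for illustration
measurements = [10.1, 9.8, 10.0, 10.3, 9.9, 10.2, 9.7, 10.0]

# Mean: the center of the data (where the vehicle sits)
mean = statistics.mean(measurements)

# Sample standard deviation (n - 1 in the denominator)
std_dev = statistics.stdev(measurements)

# Specification limits would come from the customer; these are assumed values
usl = 11.0
lsl = 9.0

print(f"Mean: {mean:.2f}")
print(f"Standard deviation: {std_dev:.2f}")
print(f"USL: {usl}, LSL: {lsl}")
```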

Pp (Fit)

Pp = (USL – LSL)/(6 * Standard Deviation)

So, let’s break this down a tad, starting with the numerator (USL – LSL). This is the distance between the upper specification limit and the lower specification limit or, in other words, the width of the garage.

The denominator, (6 * Standard Deviation), spans almost the full range of your data (99.7%). This is based on the empirical rule, which states that normally distributed data will fall within +/- 1, 2 and 3 standard deviations of the mean as follows:

- 68% within +/-1 standard deviation

- 95% within +/-2 standard deviations

- 99.7% within +/-3 standard deviations
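For the curious, the percentages above can be checked against a standard normal distribution. This is a small sketch using Python's built-in `statistics.NormalDist`; the helper name `coverage` is just an illustrative choice.

```python
from statistics import NormalDist

# Standard normal distribution: mean 0, standard deviation 1
standard_normal = NormalDist(mu=0.0, sigma=1.0)

def coverage(k):
    """Fraction of normally distributed data within +/- k standard deviations."""
    return standard_normal.cdf(k) - standard_normal.cdf(-k)

for k in (1, 2, 3):
    print(f"+/-{k} standard deviations: {coverage(k):.1%}")
```

The results come out at roughly 68.3%, 95.4% and 99.7%, which the empirical rule rounds to the familiar 68, 95 and 99.7. Keep in mind this only holds when the data is reasonably close to normally distributed.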

So, for our purposes, the vehicle we are parking in the garage is 6 standard deviations wide (provided you don’t have a 2×4 sticking out the window). A Pp index of 1.5 and above means the data fits well within the specification limits with room to spare. In other words, the garage is 1.5 times the size of the car, so we’re good. Any lower and you have to take your groceries out of the back seat before parking in the garage.
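The Pp formula is simple enough to sketch in a few lines of Python. The function name `pp` and the input values below are assumptions chosen for illustration, not real process data.

```python
def pp(usl, lsl, std_dev):
    """Pp = (USL - LSL) / (6 * standard deviation)."""
    if std_dev <= 0:
        raise ValueError("standard deviation must be positive")
    if usl <= lsl:
        raise ValueError("USL must be greater than LSL")
    return (usl - lsl) / (6 * std_dev)

# Hypothetical garage: limits of 9.0 and 11.0, standard deviation of 0.2
garage_fit = pp(usl=11.0, lsl=9.0, std_dev=0.2)
print(f"Pp = {garage_fit:.2f}")
```

With these made-up numbers the garage is 2.0 wide and the vehicle is 1.2 wide, so Pp lands a bit above the 1.5 mark.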

Ppk (Centering)

Ppk (upper) = (USL – Mean)/(3 * Standard Deviation)

Ppk (lower) = (Mean – LSL)/(3 * Standard Deviation)

Because Ppk measures the distance to the closest limit, there are two formulas, and Ppk is the smaller of the two results. Since the closest wall of the garage is the one that is going to scratch the paint of our new car, that is the only one we are concerned with. The numerators, (USL – Mean) and (Mean – LSL), tell us how far the specification limit is from the center of the data (distance between the “H” emblem on the hood of the Humvee and the closest wall).
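Here is a rough Python sketch of the two Ppk formulas and the "closest wall" choice between them. The function name `ppk` and the sample numbers are illustrative assumptions.

```python
def ppk(usl, lsl, mean, std_dev):
    """Return the smaller of Ppk (upper) and Ppk (lower)."""
    if std_dev <= 0:
        raise ValueError("standard deviation must be positive")
    ppk_upper = (usl - mean) / (3 * std_dev)  # distance to the upper wall
    ppk_lower = (mean - lsl) / (3 * std_dev)  # distance to the lower wall
    # Only the nearest wall can scratch the paint
    return min(ppk_upper, ppk_lower)

# Hypothetical process parked closer to the upper limit
nearest_wall = ppk(usl=11.0, lsl=9.0, mean=10.4, std_dev=0.2)
print(f"Ppk = {nearest_wall:.2f}")
```

In this made-up case the upper formula gives about 1.0 and the lower gives about 2.3, so the upper limit is the wall to worry about.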

Since we are only talking about measuring from the midpoint of the data for Ppk, we are only concerned with half of the distribution curve (so 3 standard deviations instead of 6). If the Pp index is good (>1.5) but the Ppk is low, say 1.2, that tells us that the data could fit within the specification limits but the data is not centered and is therefore in danger of exceeding one of the specification limits. Grandpa parked too close to one side of the garage.
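To see the "good Pp, low Ppk" situation in numbers, the sketch below computes both indices for the same hypothetical process, once centered and once shifted toward the upper limit. All values are invented for illustration, and `capability` is a made-up helper name.

```python
def capability(usl, lsl, mean, std_dev):
    """Return (Pp, Ppk) for one process."""
    pp_index = (usl - lsl) / (6 * std_dev)
    ppk_upper = (usl - mean) / (3 * std_dev)
    ppk_lower = (mean - lsl) / (3 * std_dev)
    return pp_index, min(ppk_upper, ppk_lower)

# Same spread, same limits; only the centering changes
centered = capability(usl=11.0, lsl=9.0, mean=10.0, std_dev=0.2)
off_center = capability(usl=11.0, lsl=9.0, mean=10.28, std_dev=0.2)

for label, (pp_index, ppk_index) in (("Centered", centered),
                                     ("Off-center", off_center)):
    print(f"{label}: Pp = {pp_index:.2f}, Ppk = {ppk_index:.2f}")
```

Pp is identical in both cases because the vehicle and the garage have not changed size. Ppk matches Pp when the process is perfectly centered and drops to about 1.2 once the mean drifts toward the upper limit.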

Pp and Ppk are really valuable metrics for gauging process performance. They are often misunderstood and so get ignored despite their obvious value. Dust them off and give ’em a shot in your process metrics. You may never park a car the same way again.